Strange Loop 2019 - Explainable AI: the apex of human and machine learning

Overview

Black Box AI technologies like Deep Learning have seen great success in domains like ad delivery, speech recognition, and image classification; and have even defeated the world's best human players in Go, Starcraft, and DOTA. As a result, adoption of these technologies has skyrocketed. But as employment of Black Box AI increases in safety-intensive and scientific domains, we are learning hard lessons about their limitations: they go wrong unexpectedly and are difficult to diagnose.

This talk is about safe AI.

AI has become ubiquitous in our lives.

It takes on tasks such as ads, recommendations, image processing, and games. The common features of these tasks are that data are nearly free and unlimited, action takes priority over learning, and there is little or no cost of failure. The AI used for these tasks is inefficient (requires incredible amounts of data), opaque (hides its knowledge away), and brittle (can fail unexpectedly and catastrophically).

For tasks in Biotech, Public Health, Agriculture, and Defense/Intelligence, the common features are that data are scarce and expensive, action is dependent on learning, and failure costs lives.

However, AI meant for benign tasks is being adapted for high risk tasks.

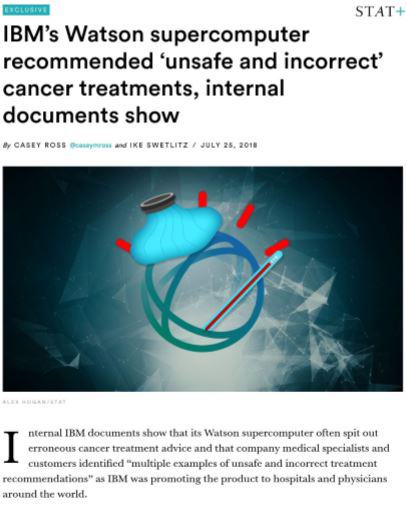

Baxter gave recent examples from Uber's self-driving car and IBM Watson.

How do we make AI safe when safety matters?

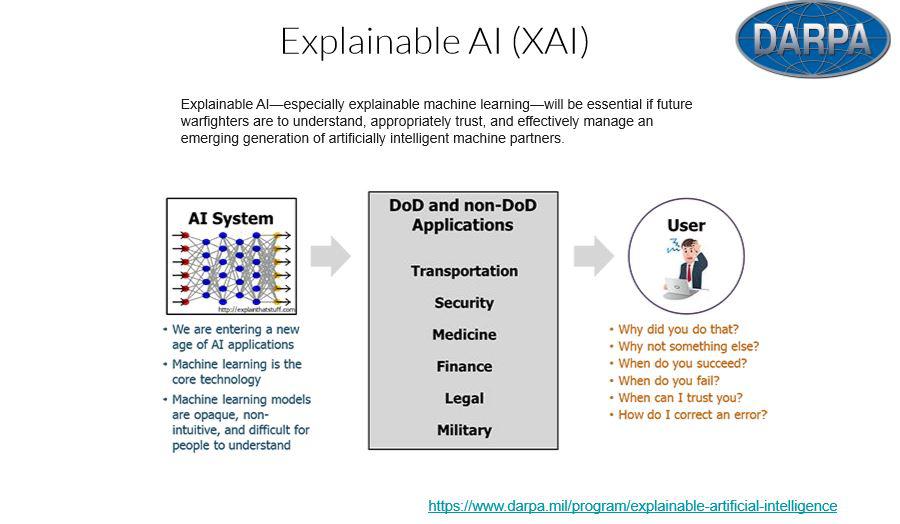

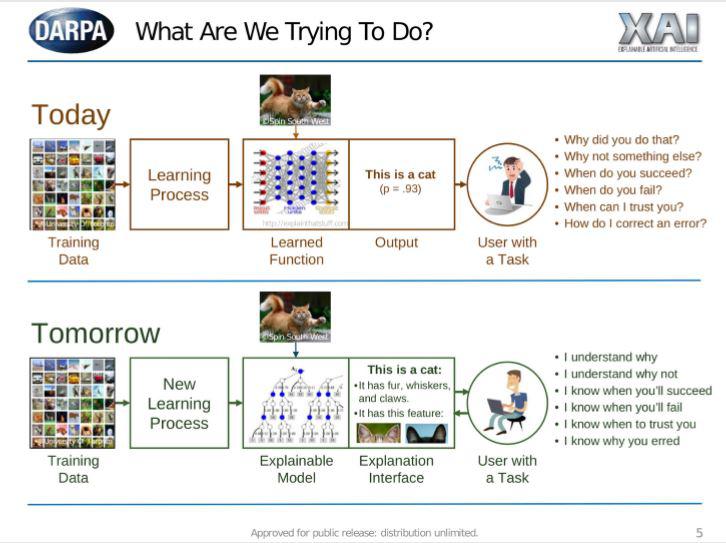

One possible way is Explainable AI (XAI).

There are questions we need to ask the AI.

So we need an Explanation Interface.

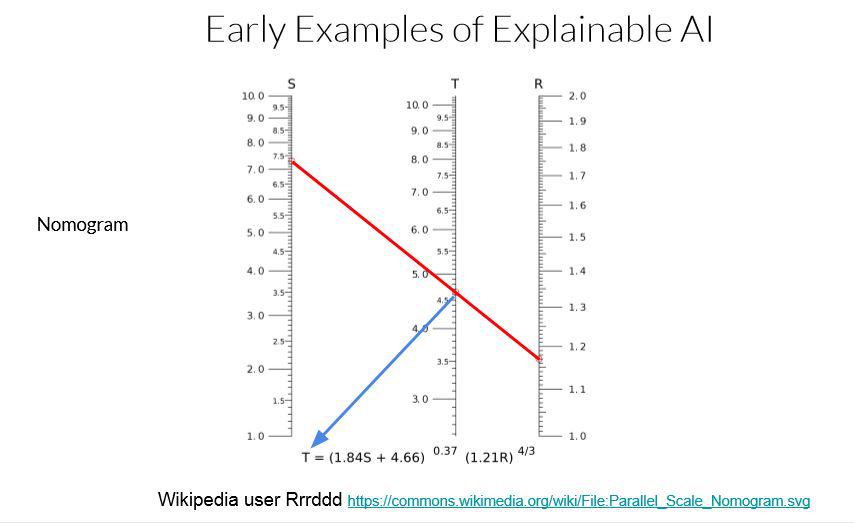

Explainable AI was around long before it became a buzzword. Baxter showed a nomogram image from years ago. Another example is expert systems, which became popular starting in the 80s.

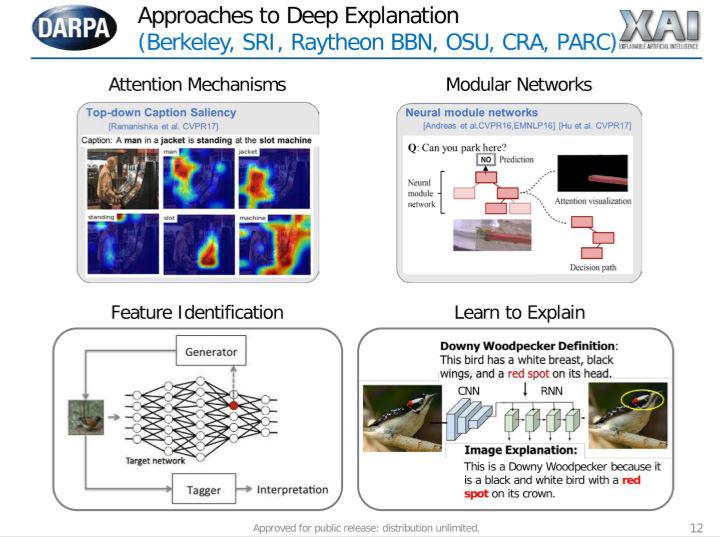

Recent examples of explainable AI: most of the work has been in deep learning, focused on image classification. Baxter discussed examples from recent papers. There are also approaches using heat maps. We can do the same thing with text.

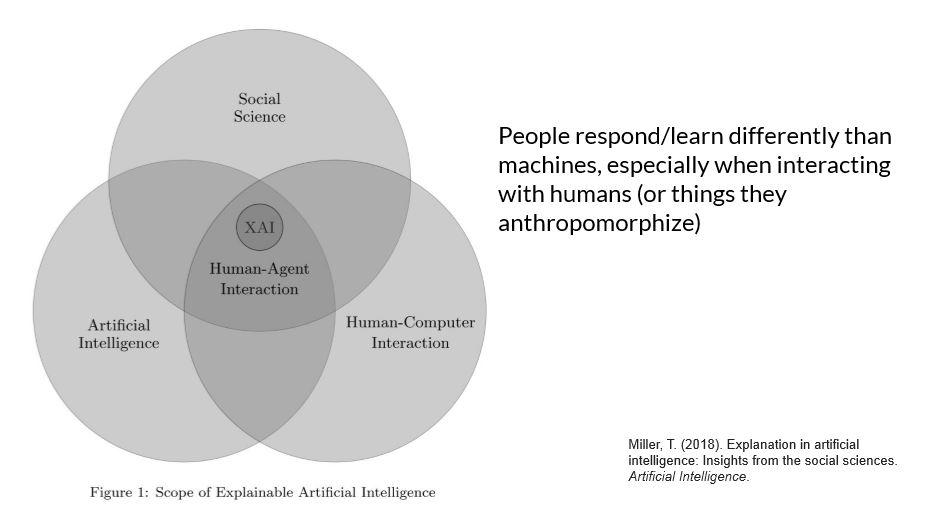
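The heat-map idea mentioned above can be illustrated with a minimal occlusion sketch: hide one part of the input at a time and record how much the model's score drops. The talk did not specify a particular method, so everything here is an assumption for illustration, including the hypothetical `toy_score` function, which stands in for a real classifier's confidence.

```python
from typing import Callable, List

Image = List[List[float]]


def toy_score(image: Image) -> float:
    """Hypothetical stand-in for a classifier's confidence.

    It only "looks at" the top-left 2x2 corner of the image.
    """
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


def occlusion_heatmap(
    image: Image,
    score: Callable[[Image], float],
    patch: int = 1,
    fill: float = 0.0,
) -> Image:
    """Return a map of score drops caused by occluding each patch."""
    base = score(image)
    rows, cols = len(image), len(image[0])
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            cells = [
                (r, c)
                for r in range(r0, min(r0 + patch, rows))
                for c in range(c0, min(c0 + patch, cols))
            ]
            for r, c in cells:
                occluded[r][c] = fill
            # A large drop means the model relied on this patch.
            drop = base - score(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_heatmap(image, toy_score)
for row in heat:
    print(" ".join(f"{v:.2f}" for v in row))
```

In this toy case only the top-left corner lights up, because that is the only region the stand-in model uses. With a real image classifier the same loop would highlight whichever regions the prediction depends on.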

XAI work is mostly focused on explanation, but there are problems with explanation.

- XAI is (mostly) built for computer scientists by computer scientists. Most explanations are written by computer scientists.

- Explanation does not actually solve the problem. Explanations don’t prevent problems; they offer excuses as to why they happen.

- Explanation is not really knowledge. Interpretable > explainable.

- Explanation makes 'inappropriate trust' worse. People are biased to trust, because more trust means faster learning. However, DNNs are brittle and unpredictable, and we really should not be trusting them.

How do we fix these problems? Baxter says he does not know how to solve all of them, but we can solve most by embedding machine knowledge into the human mind.

How do we do this? Teach!

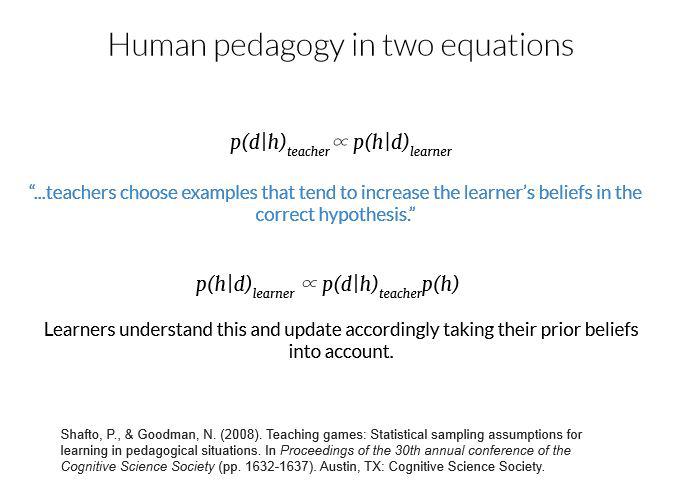

Baxter talks about the Rectangle Game and another experiment teaching image categories.

We did have some success in transferring some machine knowledge into humans.

The problem with this is that it is recursive maths, and teaching is harder than learning.

This sort of teaching helps people recover machine knowledge, but only if the knowledge is in a psychologically valid format.

- There is scaling work to do.

Baxter wraps up:

- XAI encompasses more than explanation; it is about making things safer. We need to focus more on interpretable models.

- There is still a long way to go.

- DARPA alone has dedicated 1. illion Dollars to work in this area.

Q&A

- How do we deal with XAI if it performs worse than non-explainable AI?

There have been ways of adding uncertainty to Deep Learning. Sometimes it might be better to just admit you do not know than to pretend you do. Of course, it is on a case-by-case basis.

- Since you said uncertainty is quantifiable, is there any more work being done in AI in terms of making it more resilient, less fragile?

I think it is more about becoming more aware of the uncertainty and knowing what to do.

- Are there other methods to achieve safe AI apart from XAI?

I think one other method is responsibility. However, that has ethical considerations.

- Is there any research into having AI introspect about its decisions and using that, instead of having humans introspect based on learning how the AI works?

Baxter discussed an upcoming DARPA project that might be focusing on that.

- Is part of the problem that we are not treating these as the statistical processes that they are?

You can get uncertainty from a neural network, but it does not really make sense. We need systems whose uncertainty maps to what is going on in the world.
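The point from the Q&A about admitting "I do not know" can be sketched in a few lines. The talk did not name a specific technique. As an assumption for illustration, this sketch uses disagreement among several models' votes as a rough uncertainty signal and abstains when agreement is low. The function name `predict_or_abstain` and the threshold value are hypothetical.

```python
from collections import Counter
from typing import Optional, Sequence


def predict_or_abstain(
    votes: Sequence[str], min_agreement: float = 0.8
) -> Optional[str]:
    """Return the majority label, or None ("I do not know").

    `votes` holds one predicted label per model in a hypothetical
    ensemble. The threshold is an illustrative choice, not a
    recommended value.
    """
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return label if agreement >= min_agreement else None


confident = ["cat", "cat", "cat", "cat", "cat"]
split = ["cat", "dog", "cat", "dog", "bird"]

print(predict_or_abstain(confident))
print(predict_or_abstain(split))
```

The first call returns a label because every vote agrees. The second returns `None` because no label reaches the agreement threshold. Whether such a signal actually tracks what is going on in the world is exactly the concern raised in the last answer, so this should be read as a sketch of the abstention pattern, not as a calibrated uncertainty estimate.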